
Salespeople are the ones who complain most about poor data quality, and at the same time they are probably the ones who create most of the dirty data: 76% of dirty data is created in the data entry phase. Why not make their job easier by introducing some error tolerance in their CRM/ERP search, Data Quality Firewall, online registration and data cleansing procedures?

Why is dirty data created?

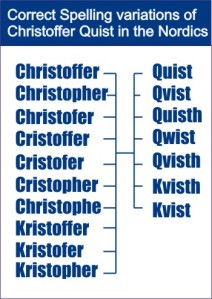

There can be multiple correct spellings of a name

Let’s say your customer Christopher Quist calls you. I have gone through the name statistics in the Nordics: Christopher is spelled in 10 different ways and Quist in 7. This means there are 10 × 7 = 70 possible correct ways to write his name!
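
The count is a simple product, because any spelling of the first name can be combined with any spelling of the surname. A minimal Python sketch of the idea, using only the three spellings of each name that appear in this post (the full Nordic variant lists are not reproduced here):

```python
from itertools import product

# Only the spellings mentioned in this post; the full lists
# (10 first-name and 7 surname variants) would give 10 * 7 = 70.
first_names = ["Christopher", "Christoffer", "Kristoffer"]
surnames = ["Quist", "Qvist", "Kvist"]

# Every first-name spelling can pair with every surname spelling.
full_names = [f"{first} {last}" for first, last in product(first_names, surnames)]
print(len(full_names))  # 9, i.e. 3 * 3
```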

What are the chances that the customer care or sales representative hits the correct form? Asking Christopher to spell his name again and again is unprofessional, time-consuming and irritating. With an error-tolerant search, the representative would find him immediately.
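
One common way to build error tolerance into a search is edit distance: the number of single-character insertions, deletions and substitutions needed to turn one string into another. The following is a minimal sketch, not the method of any particular CRM product; `levenshtein`, `fuzzy_search` and the threshold of 3 edits are illustrative assumptions:

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance via dynamic programming, keeping one previous row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(
                prev[j] + 1,               # delete ca
                cur[j - 1] + 1,            # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = cur
    return prev[-1]


def fuzzy_search(query: str, records: list[str], max_distance: int = 3) -> list[str]:
    """Return records within max_distance edits of query, closest first."""
    q = query.lower()
    scored = sorted((levenshtein(q, r.lower()), r) for r in records)
    return [r for dist, r in scored if dist <= max_distance]


records = ["Christoffer Qvist", "Kristoffer Kvist", "Christian Andersen"]
print(fuzzy_search("Christopher Quist", records))  # ['Christoffer Qvist']
```

Here "christopher quist" is 3 edits from "christoffer qvist", so that record is found, while "kristoffer kvist" is 6 edits away and falls outside the threshold. Raising the threshold catches more variants but also more false hits, which is why edit distance is often combined with phonetic or word-based rules.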

People hear differently.

I used to work at Dell’s Nordic call center in Denmark, and I would hear and spell a name differently than the Danes did. The most common way to write Christopher Quist in Norway would be Kristoffer Kvist, and in Denmark it would be Christoffer Qvist. In Nordic call centers it is not uncommon to answer calls from other countries, which increases the chance of “listening” mistakes.
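
Listening mistakes can be absorbed by comparing names on a sound-based key instead of on their exact spelling. Established phonetic algorithms such as Soundex and Double Metaphone were designed mainly for English names; the rule list below is a hypothetical, deliberately tiny set of rewrites, chosen only so that the three spellings in this post collapse to the same key:

```python
import re

# Hypothetical toy rules, applied in order; NOT a real Nordic phonetic standard.
RULES = [
    ("ph", "f"),   # christopher -> christofer
    ("ch", "k"),   # christofer  -> kristofer
    ("qu", "kv"),  # quist       -> kvist
    ("q", "k"),    # qvist       -> kvist
    ("c", "k"),
    ("w", "v"),
]

def sound_key(name: str) -> str:
    key = name.lower()
    for pattern, replacement in RULES:
        key = key.replace(pattern, replacement)
    # Collapse doubled letters, e.g. "ff" -> "f", so Christoffer ~ Christopher.
    return re.sub(r"(.)\1+", r"\1", key)

for spelling in ["Christopher Quist", "Christoffer Qvist", "Kristoffer Kvist"]:
    print(spelling, "->", sound_key(spelling))
# each line ends in "kristofer kvist"
```

Real rules need context (for example, "c" before "e" or "i" usually sounds like "s"), so treat this as an illustration of the approach rather than a usable rule set.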

People make typos.

During data entry it is easy to skip a letter, double a letter, swap two letters, skip a space, hit the key next to the intended one, or hit the neighboring key in addition to the intended one. If we apply all these plausible typos to the most common Danish spelling, Christoffer Qvist, we get 314 ways of entering the name! The Norwegian version, Kristoffer Kvist, generates 293 plausible typos!
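
The typo classes listed above can be generated mechanically. The sketch below applies each class once to a lowercased name. It assumes a US QWERTY layout with only left and right neighbors, ignores æ, ø and å, and is not the model behind the 314 and 293 figures, so its counts will differ:

```python
# Simplifying assumption: US QWERTY rows, horizontal neighbors only.
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]

def neighbors(ch: str) -> set[str]:
    """Keys directly left and right of ch on the same row."""
    for row in ROWS:
        i = row.find(ch)
        if i != -1:
            return {row[j] for j in (i - 1, i + 1) if 0 <= j < len(row)}
    return set()

def typo_variants(name: str) -> set[str]:
    s = name.lower()
    out = set()
    for i, ch in enumerate(s):
        out.add(s[:i] + s[i + 1:])                      # skip a letter or a space
        out.add(s[:i] + ch + s[i:])                     # double a letter
        if i + 1 < len(s):
            out.add(s[:i] + s[i + 1] + ch + s[i + 2:])  # swap two letters
        for n in neighbors(ch):
            out.add(s[:i] + n + s[i + 1:])              # hit the neighbor instead
            out.add(s[:i] + n + s[i:])                  # hit the neighbor too, before
            out.add(s[:i + 1] + n + s[i + 1:])          # ... or after the intended key
    out.discard(s)  # e.g. swapping the two f's leaves the name unchanged
    return out

variants = typo_variants("Christoffer Qvist")
print(len(variants))
```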

Sloppiness

Sometimes people believe it is easier or safer to enter the data again than to search for the existing record, which creates a duplicate.
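
A Data Quality Firewall addresses this by checking for likely matches before a new record is created. Below is a minimal sketch using the standard library’s `difflib.get_close_matches`; the function name `add_customer` and the 0.8 similarity cutoff are hypothetical choices, not settings from any specific product:

```python
from difflib import get_close_matches

def add_customer(name: str, customers: list[str], cutoff: float = 0.8):
    """Hypothetical firewall check: only add name if no close match exists.

    Returns (added, possible_duplicates).
    """
    by_lower = {c.lower(): c for c in customers}
    matches = get_close_matches(name.lower(), list(by_lower), n=3, cutoff=cutoff)
    if matches:
        # Show the existing records instead of creating a duplicate.
        return False, [by_lower[m] for m in matches]
    customers.append(name)
    return True, []

customers = ["Christoffer Qvist"]
print(add_customer("Christofer Qvist", customers))  # (False, ['Christoffer Qvist'])
print(add_customer("Miller Furniture", customers))  # (True, [])
```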

Other mistakes that error tolerance covers

- Information written in the wrong field (contact name in the company field)

- Information is left out (Miller Furniture vs Millers House of Furniture)

- Abbreviations (Chr. Andersen vs Christian Andersen)

- Words in a different order (Energiselskabet Buskerud vs Buskerud Energiselskab)

- Word mutations (Miller Direct Marketing vs Müller Direct & Dialogue Marketing)
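
Several of these cases can be caught by comparing names word by word instead of as whole strings. The sketch below is one illustrative approach, not a standard algorithm: it ignores word order, accepts abbreviations ending in a period, treats a word that is a prefix of another as a match (Miller/Millers, Energiselskab/Energiselskabet), strips accents and tolerates small spelling changes via `difflib`. The 0.8 ratio and the 4-letter prefix minimum are assumptions, and information typed into the wrong field is not handled:

```python
import re
import unicodedata
from difflib import SequenceMatcher

def tokens(name: str) -> list[str]:
    """Lowercase words with accents stripped; a trailing '.' marks an abbreviation."""
    decomposed = unicodedata.normalize("NFKD", name)
    plain = "".join(c for c in decomposed if not unicodedata.combining(c))
    return re.findall(r"[a-z0-9]+\.?", plain.lower())

def token_match(a: str, b: str) -> bool:
    if a.endswith(".") or b.endswith("."):
        abbr, full = (a, b) if a.endswith(".") else (b, a)
        return full.rstrip(".").startswith(abbr.rstrip("."))  # chr. ~ christian
    if a == b:
        return True
    if min(len(a), len(b)) >= 4 and (a.startswith(b) or b.startswith(a)):
        return True                                           # miller ~ millers
    return SequenceMatcher(None, a, b).ratio() >= 0.8         # miller ~ muller

def name_similarity(x: str, y: str) -> float:
    """Share of words in the shorter name that match some word in the longer one."""
    tx, ty = tokens(x), tokens(y)
    if not tx or not ty:
        return 0.0
    shorter, longer = sorted((tx, ty), key=len)
    hits = sum(any(token_match(t, u) for u in longer) for t in shorter)
    return hits / len(shorter)

pairs = [
    ("Miller Furniture", "Millers House of Furniture"),
    ("Chr. Andersen", "Christian Andersen"),
    ("Energiselskabet Buskerud", "Buskerud Energiselskab"),
    ("Miller Direct Marketing", "Müller Direct & Dialogue Marketing"),
]
for x, y in pairs:
    print(f"{x!r} vs {y!r}: {name_similarity(x, y):.2f}")
```

A word may be matched more than once, which keeps the sketch short but makes it more lenient than a one-to-one word alignment would be.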

What will error tolerance do for you?

- Cost reduction: if each agent in a 100-person call center saves 20 seconds per call, the agents can start serving the customer immediately instead of making the customer spell their name.

- Happy customers: it is annoying to have to spell out your details to a sales representative every time you want to buy something.

- Happy workers: it is frustrating to search for a customer you know is in the system but cannot find, and you waste valuable selling time!
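
The post does not say how many calls each agent handles, so the sketch below makes that a parameter. The 100 agents and the 20 seconds per call come from the cost-reduction point above; 50 calls per agent per day is an assumed figure for illustration only:

```python
def hours_saved_per_day(agents: int, calls_per_agent: int, seconds_saved: float) -> float:
    """Total agent-hours saved per day if every call is shortened by seconds_saved."""
    return agents * calls_per_agent * seconds_saved / 3600

# 100 agents and 20 s per call come from the post; 50 calls per agent
# per day is an assumed figure for illustration only.
print(round(hours_saved_per_day(100, 50, 20), 1))  # 27.8
```

With these assumed numbers the saving is 100 × 50 × 20 = 100,000 seconds, roughly 28 agent-hours every working day.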

Introduce true error tolerance today!
