
In a project with an international vendor some years ago, I introduced the concept of splitting the Data Quality Firewall (DQF) into a Frontend and a Backend Data Quality Firewall. These terms are spreading, and I get questions about how to set up the Frontend DQF. The latest query came just this week via Twitter. My focus is not on the technical side, but on usability and on the reward for operatives and companies.

Why is the Frontend DQF important?

I participated in the Information Quality Conference in London, where it was stated that 76% of poor data is created in the data entry phase. Being proactive in the data entry phase, instead of reactive later (sometime, if ever), will take you a long way toward good, clean data.

Elements of the Frontend DQF.

First, identify the systems in which data is created. These may include CRM, ERP, Logistics, Booking and Customer Care, just to mention a few.

Error tolerant intelligent search in Data Entry systems.

Operatives have been taught by Google and other search engines to go directly to the search box to find information. When you search in customer entry systems, very often you do not find the customer, even when the record exists. To avoid this, you need error tolerance and intelligence in your search functionality, as well as a suggestion feature. This will help you find the entry despite typos, different spellings, mishearings and sloppiness. This will be the biggest contributor to cleaner data. A spinoff is higher employee and customer satisfaction due to more efficient work.
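As a rough illustration of what error tolerance means in practice, here is a minimal Python sketch. It uses the standard library's `difflib.SequenceMatcher` as a stand-in for a real search engine. The function names, the sample customers and the 0.75 threshold are assumptions made for this example, not part of any particular product.

```python
from difflib import SequenceMatcher


def normalize(text):
    """Lower-case and collapse whitespace so formatting noise is ignored."""
    return " ".join(text.lower().split())


def similarity(a, b):
    """Return a score between 0 and 1; 1 means the normalized strings are equal."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def fuzzy_search(query, names, threshold=0.75, limit=5):
    """Return the best-matching names, most similar first.

    The threshold is an assumed starting point and should be tuned
    against real customer data.
    """
    scored = [(similarity(query, name), name) for name in names]
    hits = [(score, name) for score, name in scored if score >= threshold]
    hits.sort(key=lambda pair: pair[0], reverse=True)
    return [name for _, name in hits[:limit]]


# Hypothetical sample data
customers = ["Jonathan Meyer", "Katarina Schmidt", "Peter Andersen"]

# A misspelled query still finds the existing customer
print(fuzzy_search("Jonathon Meier", customers))
```

A production search would normally add phonetic matching and an index for speed. The point here is only that a typo in the query should not hide an existing record.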

If you want to learn more about error tolerance and intelligent search, read these posts:

Making the case of error tolerance in Customer Data Quality

Is Google paving the way for actual CRM/ERP Search?

Checklist for search in CRM/ERP systems.

Data Correction Registration Module

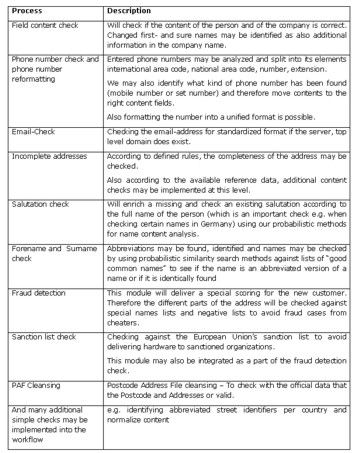

If the customer was not found and the operative has to enter the data, you have to make sure the data entered is accurate. You can install a module or workflows that check and correct the information.

Check against address vendors

If you have a subscription with an address vendor, you can send the query to them, and they can supply you with the most recently updated data. You can set this up so that it is easy for the operative and the data is correctly formatted for your systems.

This is quite easy for one country. If you are an international company, laws and regulations differ from country to country. In addition, the price can run up if you want local address vendors in several countries. It is important that your registration module can communicate with the local vendors, then format the entry and write it correctly into your database(s).

Correct the Data Formats

You might choose not to subscribe to online verification by an address vendor. There are still many checks you can do in the data entry phase. You can check:

– is the domain of the e-mail valid?

– is the format of the telephone number correct?

– is the mobile number really a mobile number?

– is the salutation correct?

– is the format of the address correct?

– is the gender correct?
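A few of the checks above can be sketched in Python with nothing but regular expressions. This is only a sketch under stated assumptions: the e-mail check is purely syntactic (confirming that a domain really exists would need a DNS lookup), the phone check assumes numbers are stored in the international E.164 form, and the mobile prefix table is an illustrative example for Norway that should be verified against the national numbering plan. All function and variable names are made up for this example.

```python
import re

# Syntactic check only: something@label.label, with an alphabetic top-level part
EMAIL_RE = re.compile(r"^[^@\s]+@([A-Za-z0-9-]+\.)+[A-Za-z]{2,}$")

# E.164: a plus sign followed by at most 15 digits, the first one not zero
E164_RE = re.compile(r"^\+[1-9]\d{1,14}$")

# Illustrative assumption: country code -> leading digits of mobile numbers
MOBILE_PREFIXES = {"+47": ("4", "9")}


def email_format_ok(email):
    return EMAIL_RE.match(email.strip()) is not None


def normalize_phone(raw):
    """Strip spaces, hyphens, dots and parentheses typed by the operative."""
    return re.sub(r"[\s\-().]", "", raw)


def phone_format_ok(raw):
    return E164_RE.match(normalize_phone(raw)) is not None


def looks_mobile(raw):
    """Return True or False when the country is in the table, else None."""
    number = normalize_phone(raw)
    for country_code, prefixes in MOBILE_PREFIXES.items():
        if number.startswith(country_code):
            return number[len(country_code):].startswith(prefixes)
    return None


def check_entry(email, mobile):
    """Collect the problems to show to the operative before saving."""
    problems = []
    if not email_format_ok(email):
        problems.append("email")
    if not phone_format_ok(mobile):
        problems.append("phone format")
    elif looks_mobile(mobile) is False:
        problems.append("not a mobile number")
    return problems


print(check_entry("anna@example", "+47 22 33 44 55"))
```

Returning a list of problems, rather than rejecting the entry outright, lets the registration screen show the operative exactly what to correct.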

Check for unwanted scam and fraud

You can check against:

– internal black lists

– sanction lists

– “Non Real Life Subjects”
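The list screening above could look roughly like the following Python sketch. The list contents are hypothetical placeholders. In a real setup the sanction lists would be loaded from the issuing authorities or a vendor, and matching would usually be error tolerant rather than exact.

```python
import unicodedata


def name_key(name):
    """Normalize a name: strip accents, ignore case and extra whitespace."""
    decomposed = unicodedata.normalize("NFKD", name)
    without_accents = "".join(
        ch for ch in decomposed if not unicodedata.combining(ch)
    )
    return " ".join(without_accents.casefold().split())


# Hypothetical placeholder lists, for illustration only
WATCHLISTS = {
    "internal_blacklist": {name_key("John Fraudster")},
    "sanction_list": {name_key("Example Sanctioned Person")},
    "non_real_life_subject": {
        name_key(n) for n in ["Mickey Mouse", "Donald Duck", "Test Test"]
    },
}


def screen(name):
    """Return the labels of every list the name appears on."""
    key = name_key(name)
    return [label for label, names in WATCHLISTS.items() if key in names]


print(screen("  mickey   MOUSE "))
print(screen("Anna Hansen"))
```

An empty result means the entry can proceed. Any label in the result should stop the registration or route it to manual review, depending on company policy.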

Duplicate check

Even though duplicates should have been found in the search, you should run an additional duplicate check when the entry is completed.
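A minimal Python sketch of such a final duplicate check is shown here. It assumes each record is a dictionary with `name` and `postal_code` fields and treats two records as probable duplicates when the postal codes are equal and the names are nearly equal. The field names and the 0.85 threshold are assumptions for this example.

```python
from difflib import SequenceMatcher


def _norm(text):
    """Lower-case and collapse whitespace before comparing."""
    return " ".join(text.lower().split())


def is_probable_duplicate(new, existing, threshold=0.85):
    """Require the same postal code and a near-identical name."""
    if _norm(new["postal_code"]) != _norm(existing["postal_code"]):
        return False
    score = SequenceMatcher(
        None, _norm(new["name"]), _norm(existing["name"])
    ).ratio()
    return score >= threshold


def find_duplicates(new, records, threshold=0.85):
    """Return every stored record that probably describes the same customer."""
    return [r for r in records if is_probable_duplicate(new, r, threshold)]


# Hypothetical stored records
records = [
    {"id": 1, "name": "Jonathan Meyer", "postal_code": "0150"},
    {"id": 2, "name": "Katarina Schmidt", "postal_code": "0150"},
]

new_entry = {"name": "Jonathon Meyer", "postal_code": "0150"}
print([r["id"] for r in find_duplicates(new_entry, records)])
```

Comparing a second field next to the name keeps false alarms down. Which fields to combine depends on what your entry systems capture reliably.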

If you incorporate these solutions, you should be able to ensure that the data you enter is clean and correct. It should be possible to get all of them from one vendor. Then you can use the Backend DQF to cleanse existing data as it deteriorates.
